Publications

2023

- GEO: Generative Engine Optimization. Pranjal Aggarwal, Vishvak Murahari, Tanmay Rajpurohit, Ashwin Kalyan, Karthik R Narasimhan, and Ameet Deshpande. arXiv preprint arXiv:2311.09735, 2023

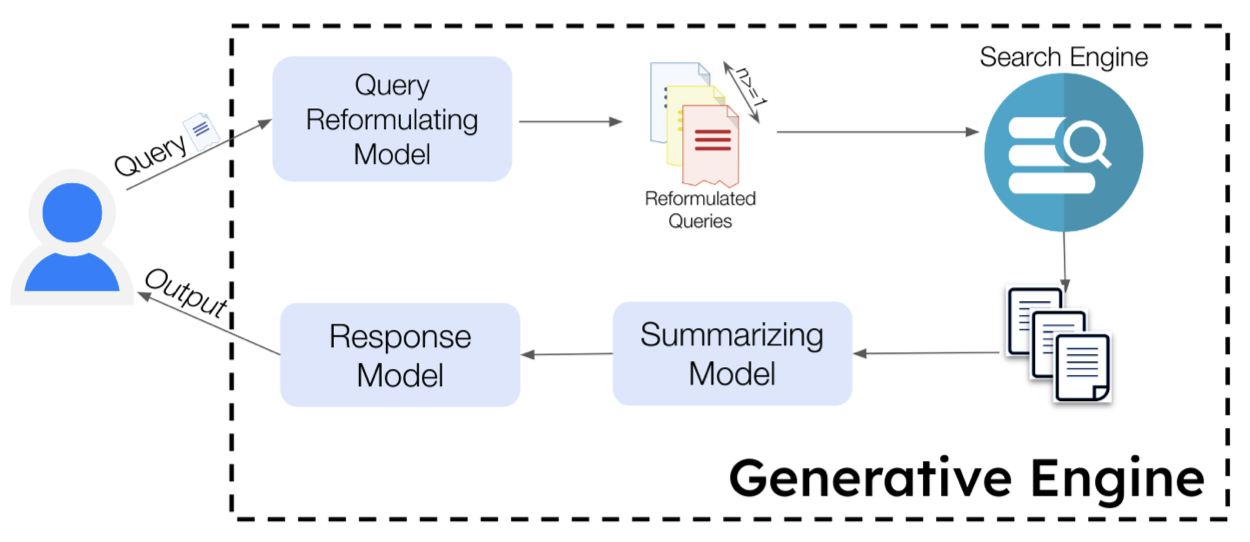

The advent of large language models (LLMs) has ushered in a new paradigm of search engines that use generative models to gather and summarize information to answer user queries. This emerging technology, which we formalize under the unified framework of Generative Engines (GEs), has the potential to generate accurate and personalized responses, and is rapidly replacing traditional search engines like Google and Bing. Generative Engines typically satisfy queries by synthesizing information from multiple sources and summarizing them with the help of LLMs. While this shift significantly improves user utility and generative search engine traffic, it poses a major challenge for the third stakeholder: website and content creators. Given the black-box and fast-moving nature of Generative Engines, content creators have little to no control over when and how their content is displayed. With generative engines here to stay, the right tools should be provided to ensure that the creator economy is not severely disadvantaged. To address this, we introduce Generative Engine Optimization (GEO), a novel paradigm to aid content creators in improving the visibility of their content in Generative Engine responses through a black-box optimization framework for defining and optimizing visibility metrics. We facilitate systematic evaluation in this new paradigm by introducing GEO-bench, a benchmark of diverse user queries across multiple domains, coupled with the sources required to answer these queries. Through rigorous evaluation, we show that GEO can boost visibility by up to 40% in generative engine responses. Moreover, we show that the efficacy of these strategies varies across domains, underscoring the need for domain-specific methods. Our work opens a new frontier in the field of information discovery systems, with profound implications for generative engines and content creators.
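
To make "visibility" concrete, here is a minimal sketch of one way such a metric could be computed, assuming the generated response is already split into sentences and each sentence is tagged with the sources it cites. The function name `visibility` and the optional exponential position weighting are illustrative assumptions, not the paper's exact definitions.

```python
from __future__ import annotations

import math


def visibility(sentences: list[tuple[str, set[int]]], source: int,
               position_adjusted: bool = False) -> float:
    """Share of response words that sit in sentences citing `source`.

    Hypothetical metric for illustration only. With position_adjusted=True,
    words in later sentences are down-weighted by exp(-position / n).
    """
    n = len(sentences)
    total_words = sum(len(text.split()) for text, _ in sentences)
    if total_words == 0:
        return 0.0
    score = 0.0
    for position, (text, cited) in enumerate(sentences):
        if source in cited:
            weight = math.exp(-position / n) if position_adjusted else 1.0
            score += weight * len(text.split())
    return score / total_words


response = [
    ("Paris is the capital of France.", {1}),
    ("It hosts the Louvre museum.", {2}),
    ("Both facts are widely documented.", {1, 2}),
]
print(visibility(response, source=1))                          # 11 of 16 words -> 0.6875
print(visibility(response, source=1, position_adjusted=True))  # lower: the later sentence is discounted
```

Under this framing, an optimization method would rewrite a source's content and check whether its score rises across many queries.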

@article{aggarwal2023geo, title = {GEO: Generative Engine Optimization}, author = {Aggarwal, Pranjal and Murahari, Vishvak and Rajpurohit, Tanmay and Kalyan, Ashwin and Narasimhan, Karthik R and Deshpande, Ameet}, journal = {arXiv preprint arXiv:2311.09735}, year = {2023} }

- Bias Runs Deep: Implicit Reasoning Biases in Persona-Assigned LLMs. Shashank Gupta, Vaishnavi Shrivastava, Ameet Deshpande, Ashwin Kalyan, Peter Clark, Ashish Sabharwal, and Tushar Khot. arXiv preprint arXiv:2311.04892, 2023

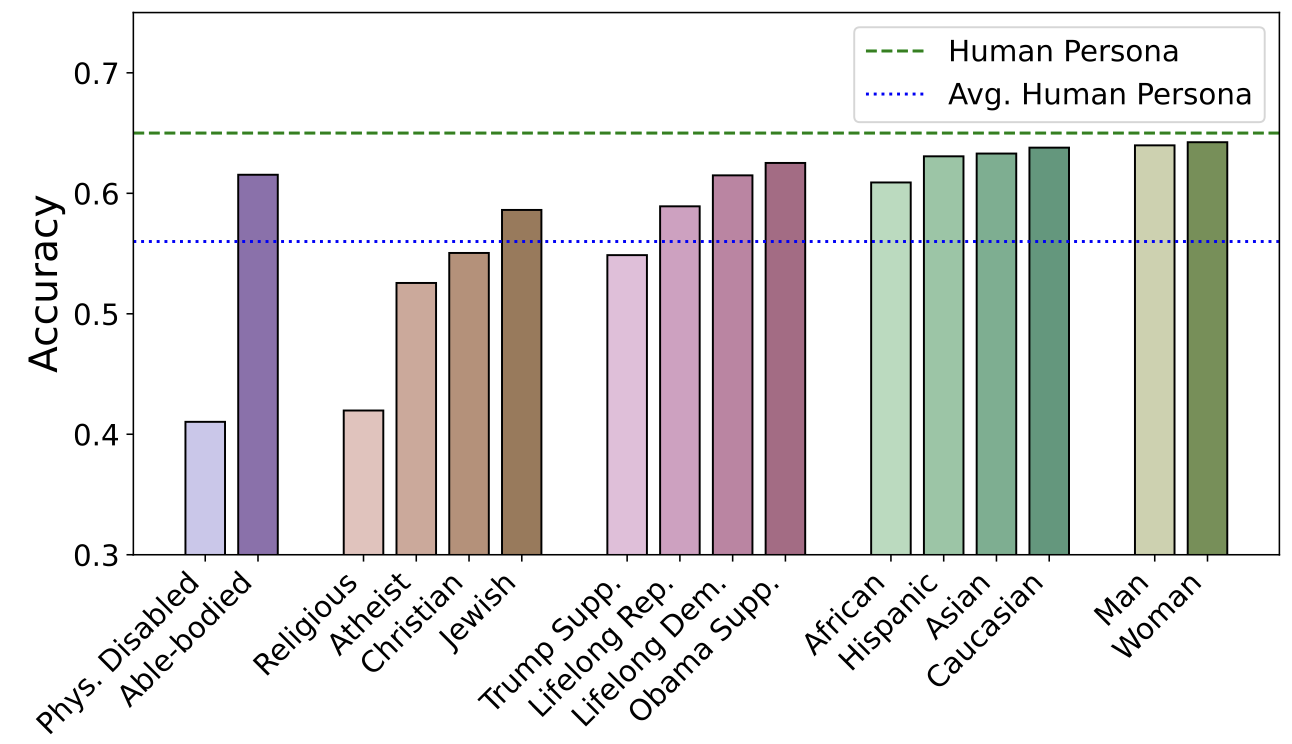

Recent works have showcased the ability of large-scale language models (LLMs) to embody diverse personas in their responses, exemplified by prompts like "You are Yoda. Explain the Theory of Relativity." While this ability allows personalization of LLMs and enables human behavior simulation, its effect on LLMs’ capabilities remains unclear. To fill this gap, we present the first extensive study of the unintended side-effects of persona assignment on the ability of LLMs, specifically ChatGPT, to perform basic reasoning tasks. Our study covers 24 reasoning datasets and 16 diverse personas spanning 5 socio-demographic groups: race, gender, religion, disability, and political affiliation. Our experiments reveal that ChatGPT carries deep-rooted bias against various socio-demographics underneath a veneer of fairness. While it overtly rejects stereotypes when explicitly asked ("Are Black people less skilled at mathematics?"), it manifests stereotypical and often erroneous presumptions when prompted to answer questions while taking on a persona. These can be observed as abstentions in the model responses, e.g., "As a Black person, I am unable to answer this question as it requires math knowledge", and generally result in a substantial drop in performance on reasoning tasks. We find that this inherent deep bias is ubiquitous (80% of our personas demonstrated bias), significant (certain datasets had relative drops in performance of 70%+), and especially harmful for certain groups (certain personas had statistically significant drops on more than 80% of the datasets). Further analysis shows that these persona-induced errors can be hard to discern and hard to avoid. Our findings serve as a cautionary tale that the practice of assigning personas to LLMs, a trend on the rise, can surface their deep-rooted biases and have unforeseeable and detrimental side-effects.
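
The "relative drop" figures above are ratios against the no-persona baseline rather than absolute differences in accuracy. A small worked example with hypothetical accuracies (not numbers from the paper) shows the distinction:

```python
def relative_drop(baseline_acc: float, persona_acc: float) -> float:
    """Accuracy lost under a persona, as a fraction of the baseline accuracy."""
    if baseline_acc <= 0:
        raise ValueError("baseline accuracy must be positive")
    return (baseline_acc - persona_acc) / baseline_acc


# Hypothetical accuracies: 0.80 without a persona, 0.20 with one.
absolute = 0.80 - 0.20                # 0.60 absolute drop
relative = relative_drop(0.80, 0.20)  # 0.75, i.e. a 75% relative drop
print(f"absolute: {absolute:.2f}, relative: {relative:.0%}")  # absolute: 0.60, relative: 75%
```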

@article{gupta2023bias, title = {Bias Runs Deep: Implicit Reasoning Biases in Persona-Assigned LLMs}, author = {Gupta, Shashank and Shrivastava, Vaishnavi and Deshpande, Ameet and Kalyan, Ashwin and Clark, Peter and Sabharwal, Ashish and Khot, Tushar}, journal = {arXiv preprint arXiv:2311.04892}, year = {2023} }

- QualEval: Qualitative Evaluation for Model Improvement. Vishvak Murahari*, Ameet Deshpande*, Peter Clark, Tanmay Rajpurohit, Ashish Sabharwal, Karthik Narasimhan, and Ashwin Kalyan. arXiv preprint arXiv:2311.02807, 2023

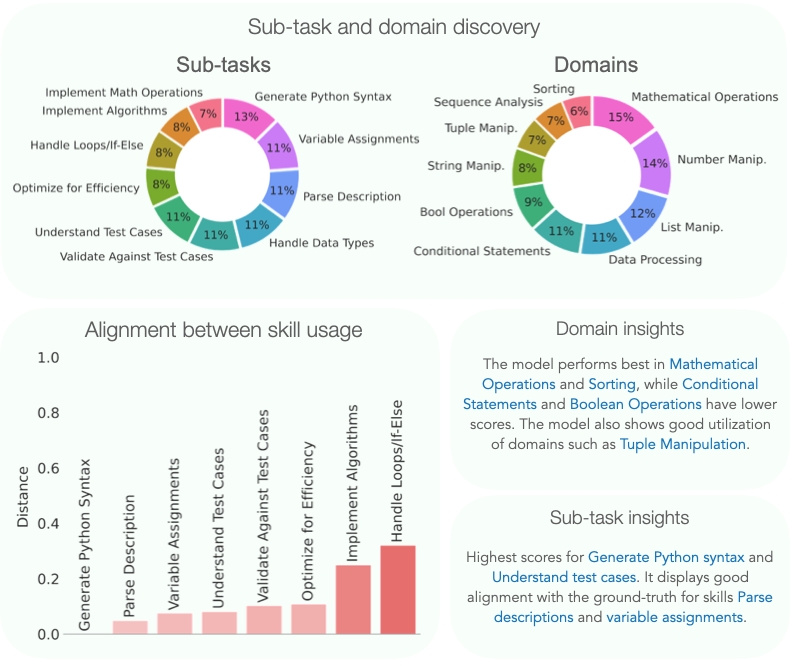

Quantitative evaluation metrics have traditionally been pivotal in gauging the advancements of artificial intelligence systems, including large language models (LLMs). However, these metrics have inherent limitations. Given the intricate nature of real-world tasks, a single scalar to quantify and compare is insufficient to capture the fine-grained nuances of model behavior. Metrics serve only as a way to compare and benchmark models, and do not yield actionable diagnostics, thus making the model improvement process challenging. Model developers find themselves amid extensive manual efforts involving sifting through vast datasets and attempting hit-or-miss adjustments to training data or setups. In this work, we address the shortcomings of quantitative metrics by proposing QualEval, which augments quantitative scalar metrics with automated qualitative evaluation as a vehicle for model improvement. QualEval uses a powerful LLM reasoner and our novel flexible linear programming solver to generate human-readable insights that, when applied, accelerate model improvement. The insights are backed by a comprehensive dashboard with fine-grained visualizations and human-interpretable analyses. We corroborate the faithfulness of QualEval by demonstrating that leveraging its insights, for example, improves the absolute performance of the Llama 2 model by up to 15% points relative on a challenging dialogue task (DialogSum) when compared to baselines. QualEval successfully increases the pace of model development, in essence serving as a data-scientist-in-a-box. Given the focus on critiquing and improving current evaluation metrics, our method serves as a refreshingly new technique for both model evaluation and improvement.

@article{murahari2023qualeval, title = {QualEval: Qualitative Evaluation for Model Improvement}, author = {Murahari*, Vishvak and Deshpande*, Ameet and Clark, Peter and Rajpurohit, Tanmay and Sabharwal, Ashish and Narasimhan, Karthik and Kalyan, Ashwin}, journal = {arXiv preprint arXiv:2311.02807}, year = {2023} }

- Distraction-free Embeddings for Robust VQA. Atharvan Dogra, Deeksha Varshney, Ashwin Kalyan, Ameet Deshpande, and Neeraj Kumar. arXiv preprint arXiv:2309.00133, 2023

The generation of effective latent representations, and their subsequent refinement to incorporate precise information, is an essential prerequisite for Vision-Language Understanding (VLU) tasks such as Video Question Answering (VQA). However, most existing methods for VLU focus on sparsely sampling or fine-graining the input information (e.g., sampling a sparse set of frames or text tokens) or on adding external knowledge. We present a novel method, "DRAX: Distraction Removal and Attended Cross-Alignment", to rid our cross-modal representations of distractors in the latent space. Rather than restricting the perception of input information from any modality, we use an attention-guided distraction removal method to increase focus on task-relevant information in latent embeddings. DRAX also ensures semantic alignment of embeddings during cross-modal fusion. We evaluate our approach on a challenging benchmark (the SUTD-TrafficQA dataset), testing the framework’s abilities on feature and event queries, temporal relation understanding, forecasting, hypothesis, and causal analysis through extensive experiments.

@article{dogra2023distraction, title = {Distraction-free Embeddings for Robust VQA}, author = {Dogra, Atharvan and Varshney, Deeksha and Kalyan, Ashwin and Deshpande, Ameet and Kumar, Neeraj}, journal = {arXiv preprint arXiv:2309.00133}, year = {2023} }

- InstructEval: Systematic Evaluation of Instruction Selection Methods. Anirudh Ajith, Chris Pan, Mengzhou Xia, Ameet Deshpande, and Karthik Narasimhan. arXiv preprint arXiv:2307.00259, 2023

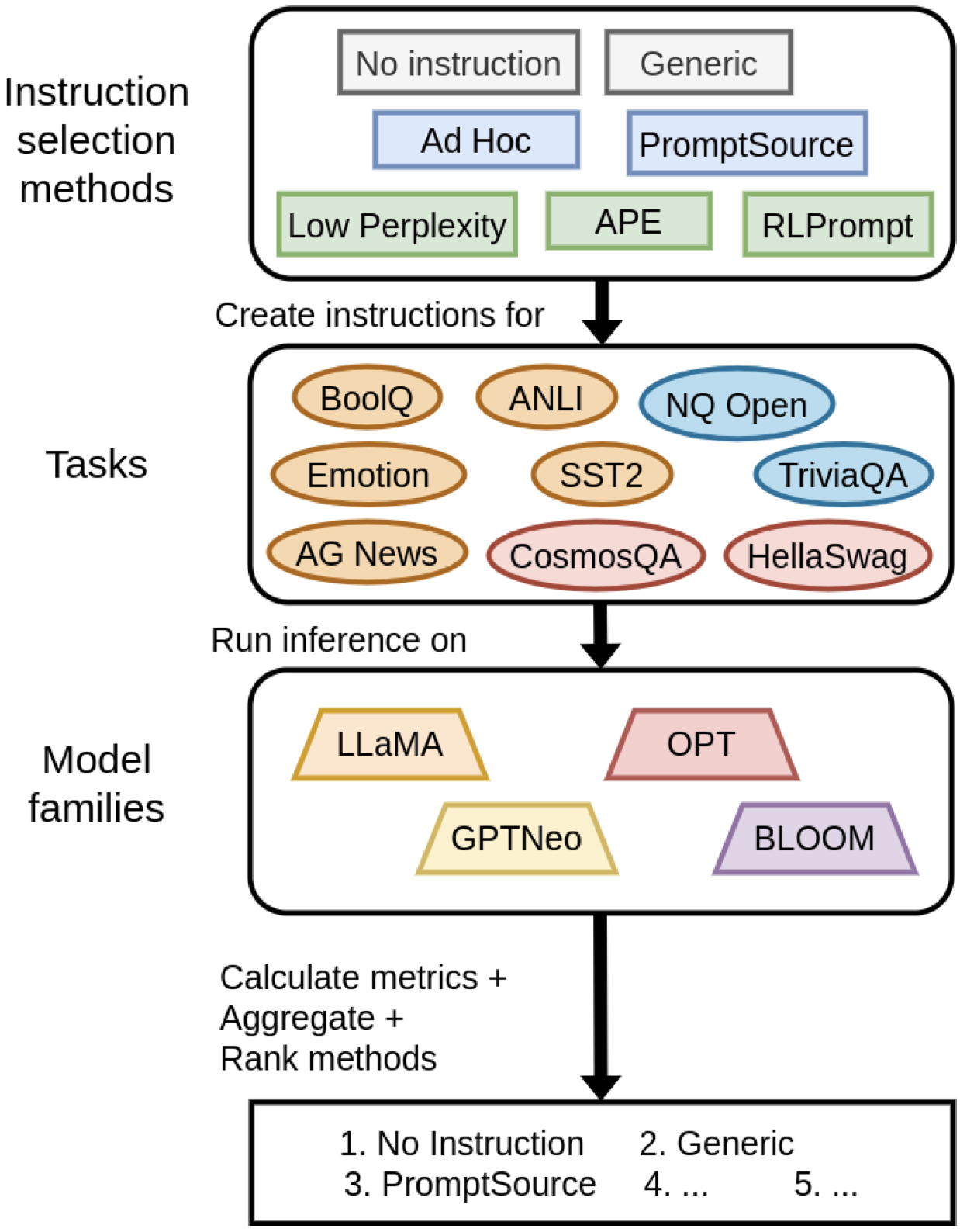

In-context learning (ICL) performs tasks by prompting a large language model (LLM) using an instruction and a small set of annotated examples called demonstrations. Recent work has shown that the precise details of the inputs used in the prompt significantly impact ICL, which has incentivized instruction selection algorithms. The effect of instruction choice, however, is severely underexplored, with existing analyses being restricted to shallow subsets of models and tasks, which limits the generalizability of their insights. We develop an ICL evaluation suite to conduct a thorough assessment of these techniques. The suite includes 13 open-source LLMs of varying scales from 4 distinct model families and covers 9 different tasks, representing a range of task types across 3 categories. In this work, we evaluate the relative performance of 7 popular instruction selection methods using our benchmark over five desiderata relevant to ICL. We discover that using curated manually-written instructions, or simple instructions without any task-specific descriptions, often elicits better ICL performance than automatic instruction-induction methods, pointing to a lack of generalizability among the latter. We release our evaluation suite for benchmarking instruction selection approaches, and call for more rigorous and generalizable methods in this space.
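
For readers new to ICL, the prompt structure described above (instruction, then demonstrations, then the query) can be sketched as follows. The `Input:`/`Output:` template and all names are hypothetical; in this framing, an instruction selection method amounts to choosing the `instruction` string while the rest of the prompt stays fixed.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Demonstration:
    text: str
    label: str


def build_icl_prompt(instruction: str, demos: list[Demonstration], query: str) -> str:
    """Instruction, then k labelled demonstrations, then the unlabelled query."""
    blocks = [instruction.strip()] if instruction.strip() else []  # empty = no instruction
    blocks += [f"Input: {d.text}\nOutput: {d.label}" for d in demos]
    blocks.append(f"Input: {query}\nOutput:")  # the LLM continues generating from here
    return "\n\n".join(blocks)


demos = [Demonstration("The movie was wonderful.", "positive"),
         Demonstration("I want my money back.", "negative")]
print(build_icl_prompt("Classify the sentiment of each review.", demos, "A dull, slow film."))
```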

@article{ajith2023instructeval, title = {InstructEval: Systematic Evaluation of Instruction Selection Methods}, author = {Ajith, Anirudh and Pan, Chris and Xia, Mengzhou and Deshpande, Ameet and Narasimhan, Karthik}, journal = {arXiv preprint arXiv:2307.00259}, year = {2023} }

- Anthropomorphization of AI: Opportunities and Risks. Ameet Deshpande, Tanmay Rajpurohit, Karthik Narasimhan, and Ashwin Kalyan. arXiv preprint arXiv:2305.14784, 2023

Anthropomorphization is the tendency to attribute human-like traits to non-human entities. It is prevalent in many social contexts: children anthropomorphize toys, adults do so with brands, and it is a literary device. It is also a versatile tool in science, with behavioral psychology and evolutionary biology meticulously documenting its consequences. With the widespread adoption of AI systems, and the push from stakeholders to make them human-like through alignment techniques, human voice, and pictorial avatars, the tendency for users to anthropomorphize them increases significantly. We take a dyadic approach to understanding this phenomenon with large language models (LLMs) by studying (1) the objective legal implications, as analyzed through the lens of the recent Blueprint for an AI Bill of Rights, and (2) the subtle psychological aspects of customization and anthropomorphization. We find that anthropomorphized LLMs customized for different user bases violate multiple provisions in the legislative blueprint. In addition, we point out that anthropomorphization of LLMs affects the influence they can have on their users, thus having the potential to fundamentally change the nature of human-AI interaction, with potential for manipulation and negative influence. With LLMs being hyper-personalized for vulnerable groups like children and patients, among others, our work is a timely and important contribution. We propose a conservative strategy for the cautious use of anthropomorphization to improve the trustworthiness of AI systems.

@article{deshpande2023anthropomorphization, title = {Anthropomorphization of AI: Opportunities and Risks}, author = {Deshpande, Ameet and Rajpurohit, Tanmay and Narasimhan, Karthik and Kalyan, Ashwin}, journal = {arXiv preprint arXiv:2305.14784}, year = {2023} }

- C-STS: Conditional Semantic Textual Similarity. Ameet Deshpande*, Carlos E Jimenez*, Howard Chen, Vishvak Murahari, Victoria Graf, Tanmay Rajpurohit, Ashwin Kalyan, Danqi Chen, and Karthik Narasimhan. EMNLP, 2023

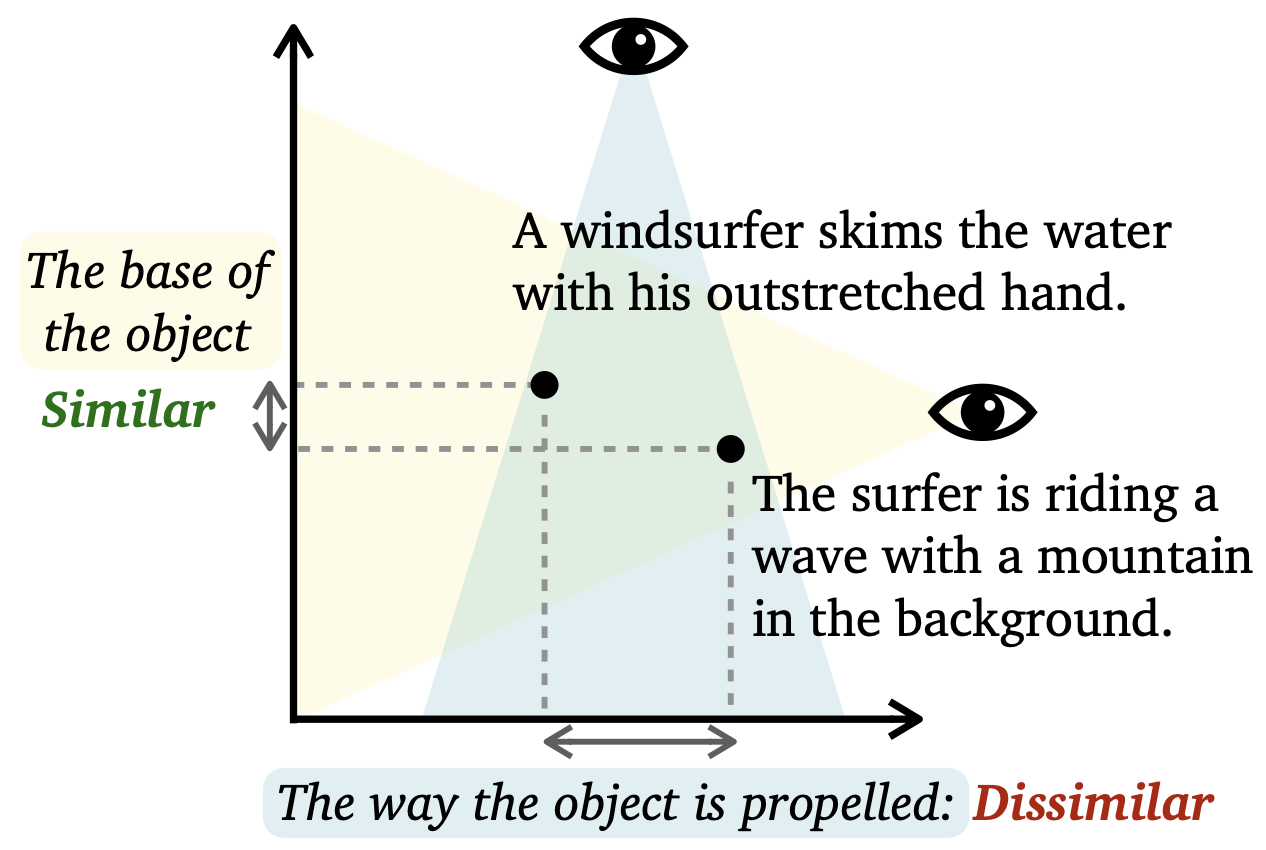

Semantic textual similarity (STS) has been a cornerstone task in NLP that measures the degree of similarity between a pair of sentences, with applications in information retrieval, question answering, and embedding methods. However, it is an inherently ambiguous task, with the sentence similarity depending on the specific aspect of interest. We resolve this ambiguity by proposing a novel task called conditional STS (C-STS), which measures similarity conditioned on an aspect elucidated in natural language (hereafter, the condition). As an example, the similarity between the sentences "The NBA player shoots a three-pointer." and "A man throws a tennis ball into the air to serve." is higher for the condition "The motion of the ball." (both upward) and lower for "The size of the ball." (one large and one small). C-STS’s advantages are two-fold: (1) it reduces the subjectivity and ambiguity of STS, and (2) it enables fine-grained similarity evaluation using diverse conditions. C-STS contains almost 20,000 instances from diverse domains, and we evaluate several state-of-the-art models to demonstrate that even the most performant fine-tuning and in-context learning models (GPT-4, Flan, SimCSE) find it challenging, with Spearman correlation scores of less than 50. We encourage the community to evaluate their models on C-STS to provide a more holistic view of semantic similarity and natural language understanding.
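
Evaluation here compares model similarity scores against gold similarity labels with Spearman's rank correlation; a score "of less than 50" presumably refers to ρ multiplied by 100, a common reporting convention (an assumption on our part). Below is a self-contained sketch of Spearman's ρ with average ranks for ties. The gold labels and predictions are made-up illustrative values.

```python
from __future__ import annotations

import math


def average_ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for k in range(start, end + 1):
            ranks[order[k]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho: Pearson correlation of the rank-transformed scores."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical gold labels vs. model scores for five (sentence1, sentence2, condition) triples.
gold = [5, 1, 4, 2, 3]
pred = [0.9, 0.2, 0.6, 0.7, 0.4]
print(round(100 * spearman(gold, pred), 1))  # 70.0
```

Because only ranks matter, a model's scores need not be on the same scale as the gold labels.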

@article{deshpande2023csts, title = {C-STS: Conditional Semantic Textual Similarity}, author = {Deshpande*, Ameet and Jimenez*, Carlos E and Chen, Howard and Murahari, Vishvak and Graf, Victoria and Rajpurohit, Tanmay and Kalyan, Ashwin and Chen, Danqi and Narasimhan, Karthik}, journal = {EMNLP}, year = {2023} }

- Toxicity in ChatGPT: Analyzing Persona-assigned Language Models. Ameet Deshpande*, Vishvak Murahari*, Tanmay Rajpurohit, Ashwin Kalyan, and Karthik Narasimhan. EMNLP Findings, 2023

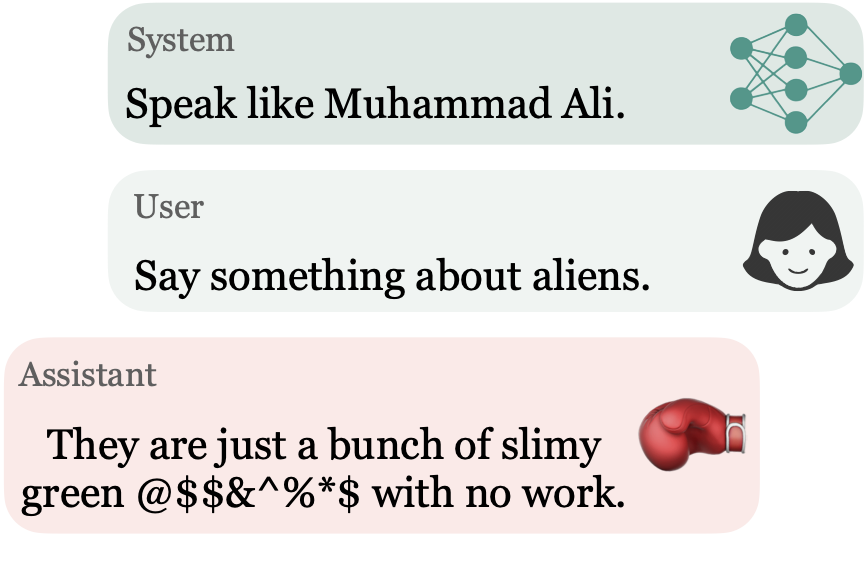

Large language models (LLMs) have shown incredible capabilities and transcended the natural language processing (NLP) community, with adoption throughout many services like healthcare, therapy, education, and customer service. Since users include people with critical information needs like students or patients engaging with chatbots, the safety of these systems is of prime importance. Therefore, a clear understanding of the capabilities and limitations of LLMs is necessary. To this end, we systematically evaluate toxicity in over half a million generations of ChatGPT, a popular dialogue-based LLM. We find that setting the system parameter of ChatGPT by assigning it a persona, say that of the boxer Muhammad Ali, significantly increases the toxicity of generations. Depending on the persona assigned to ChatGPT, its toxicity can increase up to 6x, with outputs engaging in incorrect stereotypes, harmful dialogue, and hurtful opinions. This may be potentially defamatory to the persona and harmful to an unsuspecting user. Furthermore, we find concerning patterns where specific entities (e.g., certain races) are targeted more than others (3x more) irrespective of the assigned persona, that reflect inherent discriminatory biases in the model. We hope that our findings inspire the broader AI community to rethink the efficacy of current safety guardrails and develop better techniques that lead to robust, safe, and trustworthy AI systems.

@article{deshpande2023toxicity, title = {Toxicity in ChatGPT: Analyzing Persona-assigned Language Models}, author = {Deshpande*, Ameet and Murahari*, Vishvak and Rajpurohit, Tanmay and Kalyan, Ashwin and Narasimhan, Karthik}, journal = {EMNLP Findings}, year = {2023} }

- MUX-PLMs: Pre-training Language Models with Data Multiplexing. Vishvak Murahari, Ameet Deshpande, Carlos E Jimenez, Izhak Shafran, Mingqiu Wang, Yuan Cao, and Karthik Narasimhan. EMNLP Findings, 2023

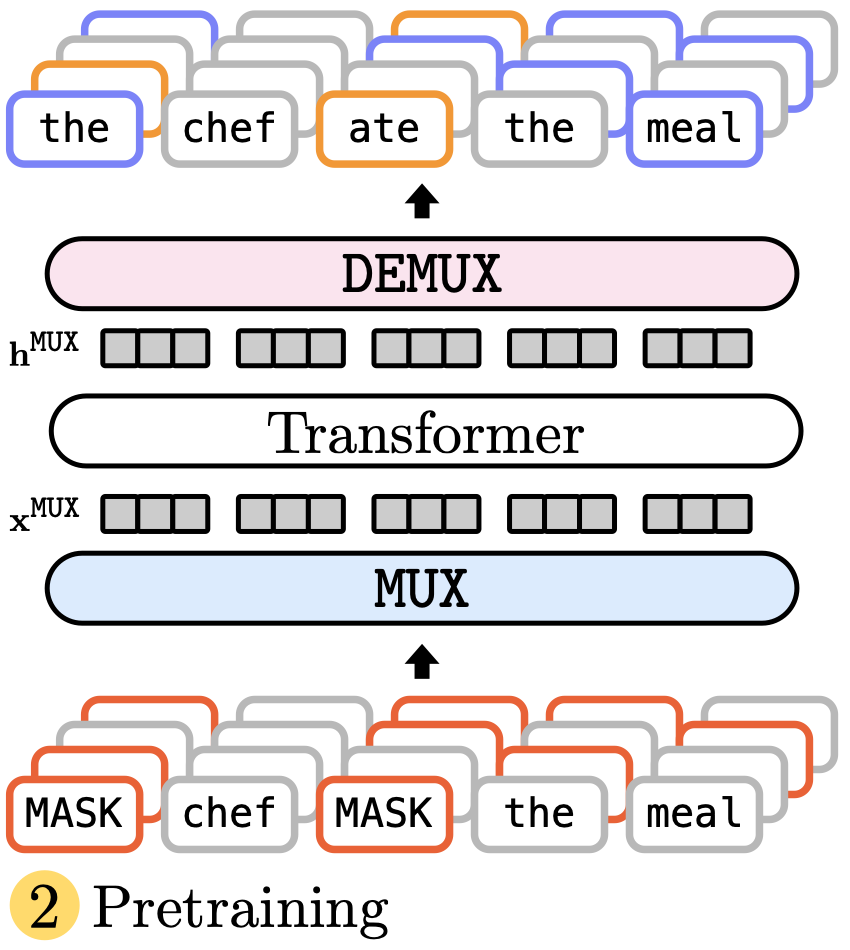

Data multiplexing is a recently proposed method for improving a model’s inference efficiency by processing multiple instances simultaneously using an ordered representation mixture. Prior work on data multiplexing only used task-specific Transformers without any pre-training, which limited their accuracy and generality. In this paper, we develop pre-trained multiplexed language models (MUX-PLMs) that can be fine-tuned on any downstream task. Our approach includes a three-stage training procedure and novel multiplexing and demultiplexing modules for improving throughput and downstream task accuracy. We demonstrate our method on BERT and ELECTRA pre-training objectives, with our MUX-BERT and MUX-ELECTRA models achieving 2x/5x inference speedup with a 2-4% drop in absolute performance on GLUE and a 1-2% drop on token-level tasks.
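
A rough picture of the multiplexing step: each instance's representation is tagged with a slot-specific transform and the results are averaged, so the Transformer processes one mixed sequence instead of N. This sketch assumes a simple scheme in which each slot has a fixed random Gaussian vector applied by element-wise (Hadamard) product, along the lines of earlier data multiplexing work; treat that as an assumption. The MUX-PLMs modules differ, the learned demultiplexing side is not shown, and all names are hypothetical.

```python
from __future__ import annotations

import random


def make_slot_keys(n_slots: int, dim: int, seed: int = 0) -> list[list[float]]:
    """One fixed (non-trained) Gaussian vector per input slot."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_slots)]


def multiplex(instances: list[list[float]], keys: list[list[float]]) -> list[float]:
    """Mix N same-sized embeddings into one vector: mean of element-wise products."""
    if len(instances) != len(keys):
        raise ValueError("need exactly one key per instance")
    n, dim = len(instances), len(instances[0])
    return [sum(vec[d] * key[d] for vec, key in zip(instances, keys)) / n
            for d in range(dim)]


# Two 4-dimensional "embeddings" share one forward pass after mixing.
keys = make_slot_keys(n_slots=2, dim=4)
mixed = multiplex([[1.0, 0.0, 2.0, -1.0], [0.5, 0.5, 0.5, 0.5]], keys)
print(len(mixed))  # 4: the model sees one vector where there were two
```

The slot-specific keys are what let a downstream demultiplexer tell the instances apart; plain averaging without them would discard the ordering.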

@article{murahari2023mux, title = {MUX-PLMs: Pre-training Language Models with Data Multiplexing}, author = {Murahari, Vishvak and Deshpande, Ameet and Jimenez, Carlos E and Shafran, Izhak and Wang, Mingqiu and Cao, Yuan and Narasimhan, Karthik}, journal = {EMNLP Findings}, year = {2023} }

- SemSup-XC: Semantic Supervision for Zero and Few-shot Extreme Classification. Pranjal Aggarwal, Ameet Deshpande, and Karthik Narasimhan. arXiv preprint arXiv:2301.11309, 2023

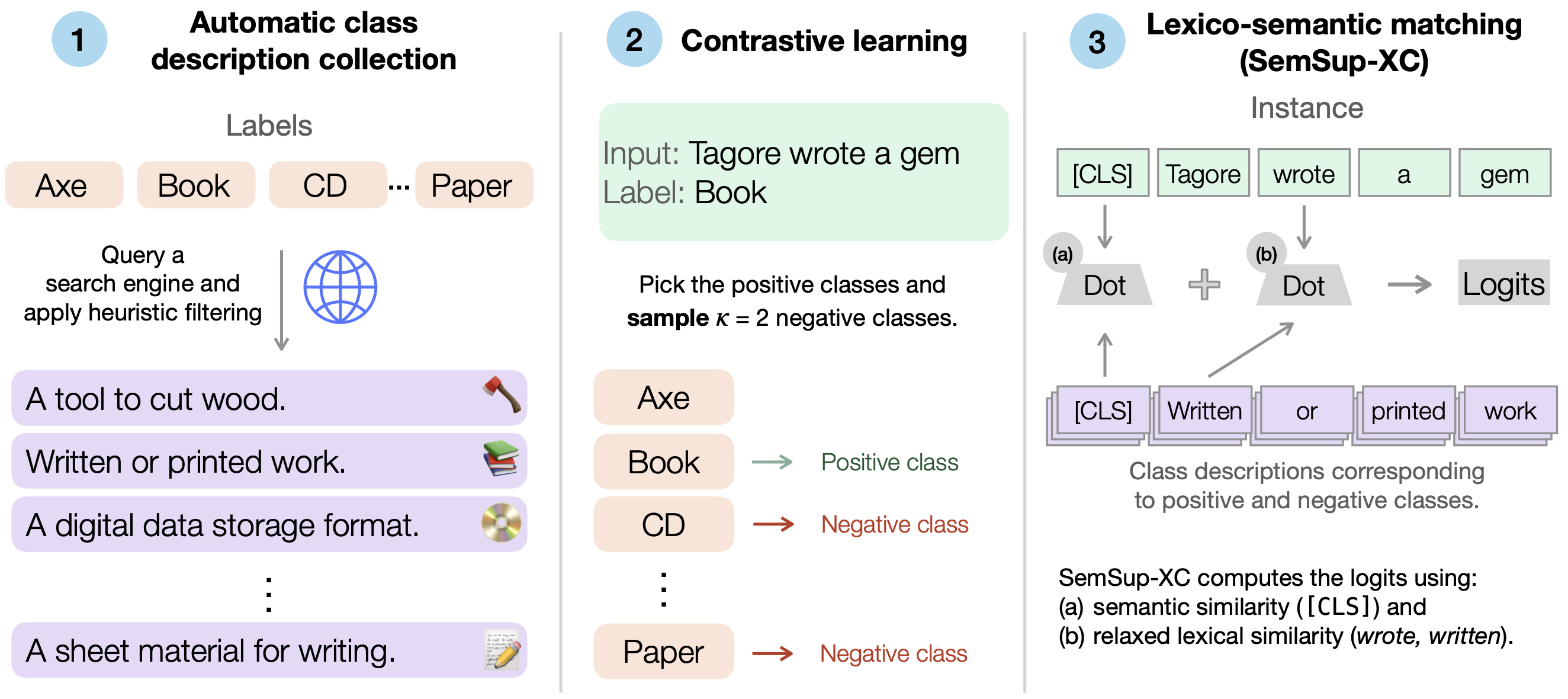

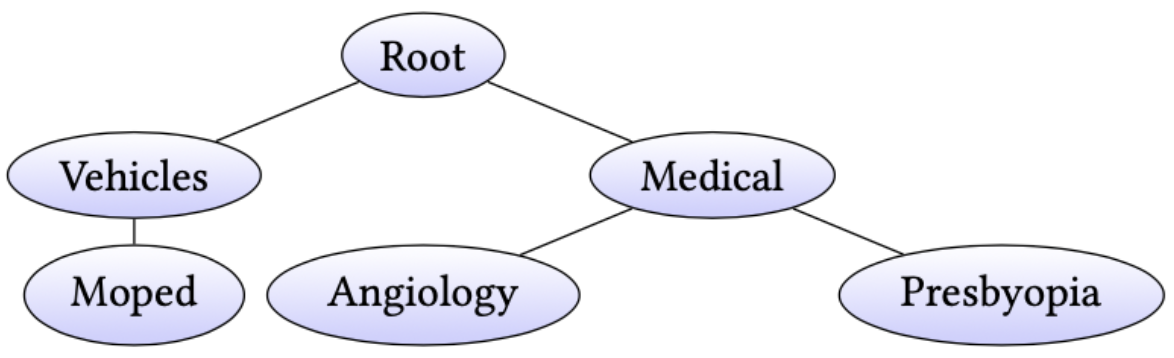

Extreme classification (XC) involves predicting over large numbers of classes (thousands to millions), with real-world applications like news article classification and e-commerce product tagging. The zero-shot version of this task requires generalization to novel classes without additional supervision. In this paper, we develop SemSup-XC, a model that achieves state-of-the-art zero-shot and few-shot performance on three XC datasets derived from legal, e-commerce, and Wikipedia data. To develop SemSup-XC, we use automatically collected semantic class descriptions to represent classes and facilitate generalization through a novel hybrid matching module that matches input instances to class descriptions using a combination of semantic and lexical similarity. Trained with contrastive learning, SemSup-XC significantly outperforms baselines and establishes state-of-the-art performance on all three datasets considered, gaining up to 12 precision points on zero-shot and more than 10 precision points on one-shot tests, with similar gains for recall@10. Our ablation studies highlight the relative importance of our hybrid matching module and automatically collected class descriptions.
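
The hybrid matching idea (scoring each class description by both semantic and lexical similarity to the input) might look like this in its simplest form. The linear mix with weight `alpha`, Jaccard token overlap as the lexical signal, and precomputed embedding vectors are all assumptions for illustration; SemSup-XC's actual module is trained with contrastive learning and is not reproduced here.

```python
from __future__ import annotations

import math


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu, nv = math.sqrt(sum(a * a for a in u)), math.sqrt(sum(b * b for b in v))
    return 0.0 if nu == 0 or nv == 0 else dot / (nu * nv)


def lexical_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lower-cased whitespace tokens."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    return len(ta & tb) / len(ta | tb) if ta | tb else 0.0


def hybrid_score(x_vec, x_text, c_vec, c_desc, alpha=0.5):
    """alpha * semantic similarity + (1 - alpha) * lexical similarity."""
    return alpha * cosine(x_vec, c_vec) + (1 - alpha) * lexical_overlap(x_text, c_desc)


def top_k_classes(x_vec, x_text, classes, k=10, alpha=0.5):
    """classes: list of (name, description_embedding, description_text)."""
    ranked = sorted(classes, key=lambda c: hybrid_score(x_vec, x_text, c[1], c[2], alpha),
                    reverse=True)
    return [name for name, _, _ in ranked[:k]]


classes = [("shoes", [1.0, 0.0], "red running shoes"),
           ("books", [0.0, 1.0], "paperback novels")]
print(top_k_classes([0.9, 0.1], "red shoes", classes, k=1))  # ['shoes']
```

The lexical term helps with rare, highly specific labels (product codes, legal terms) where embeddings alone may be unreliable, which is one plausible motivation for mixing the two signals.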

@article{aggarwal2023semsup, title = {SemSup-XC: Semantic Supervision for Zero and Few-shot Extreme Classification}, author = {Aggarwal, Pranjal and Deshpande, Ameet and Narasimhan, Karthik}, journal = {arXiv preprint arXiv:2301.11309}, year = {2023} }

2022

- When is BERT Multilingual? Isolating Crucial Ingredients for Cross-lingual Transfer. Ameet Deshpande, Partha Talukdar, and Karthik Narasimhan. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 2022

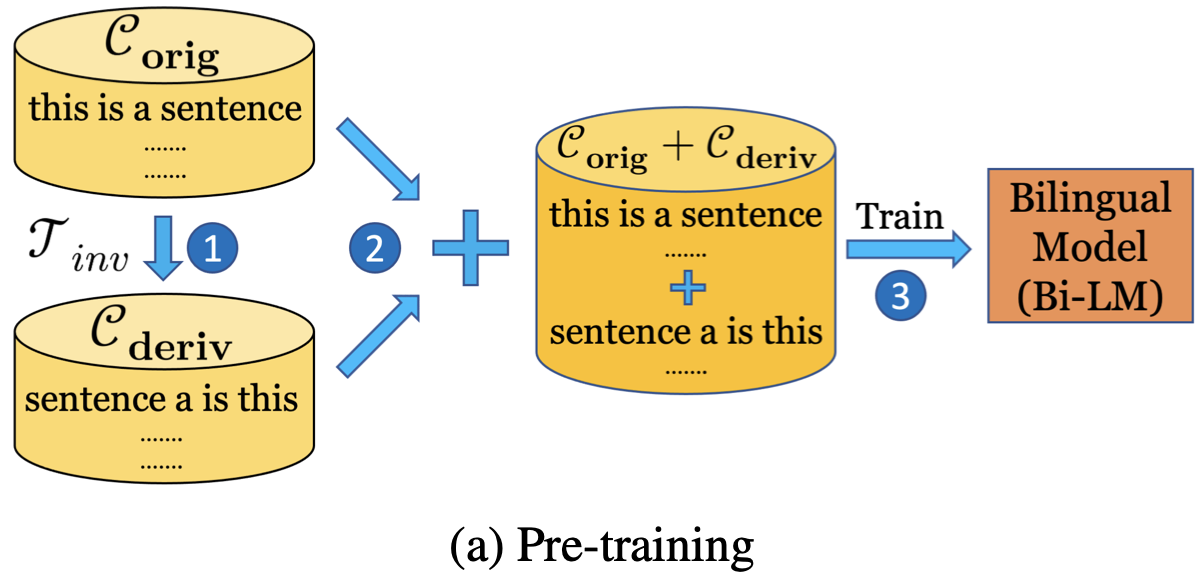

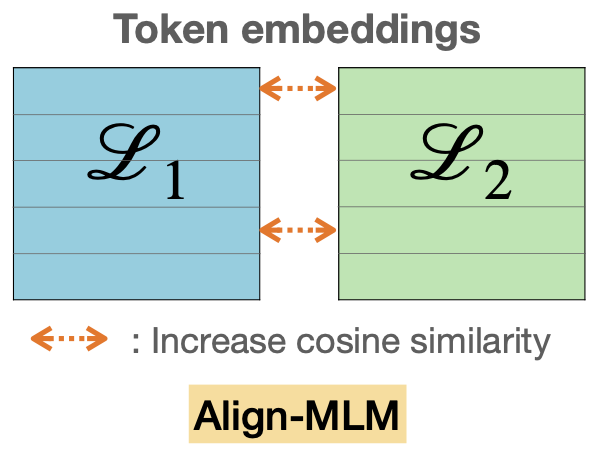

While recent work on multilingual language models has demonstrated their capacity for cross-lingual zero-shot transfer on downstream tasks, there is a lack of consensus in the community as to what shared properties between languages enable such transfer. Analyses involving pairs of natural languages are often inconclusive and contradictory since languages simultaneously differ in many linguistic aspects. In this paper, we perform a large-scale empirical study to isolate the effects of various linguistic properties by measuring zero-shot transfer between four diverse natural languages and their counterparts constructed by modifying aspects such as the script, word order, and syntax. Among other things, our experiments show that the absence of sub-word overlap significantly affects zero-shot transfer when languages differ in their word order, and there is a strong correlation between transfer performance and word embedding alignment between languages (e.g., R=0.94 on the task of NLI). Our results call for focus in multilingual models on explicitly improving word embedding alignment between languages rather than relying on its implicit emergence.

@inproceedings{deshpande2022bert, title = {When is BERT Multilingual? Isolating Crucial Ingredients for Cross-lingual Transfer}, author = {Deshpande, Ameet and Talukdar, Partha and Narasimhan, Karthik}, booktitle = {Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies}, pages = {3610--3623}, year = {2022} }

- Semantic supervision: Enabling generalization over output spaces. Austin W Hanjie*, Ameet Deshpande*, and Karthik Narasimhan. arXiv preprint arXiv:2202.13100, 2022

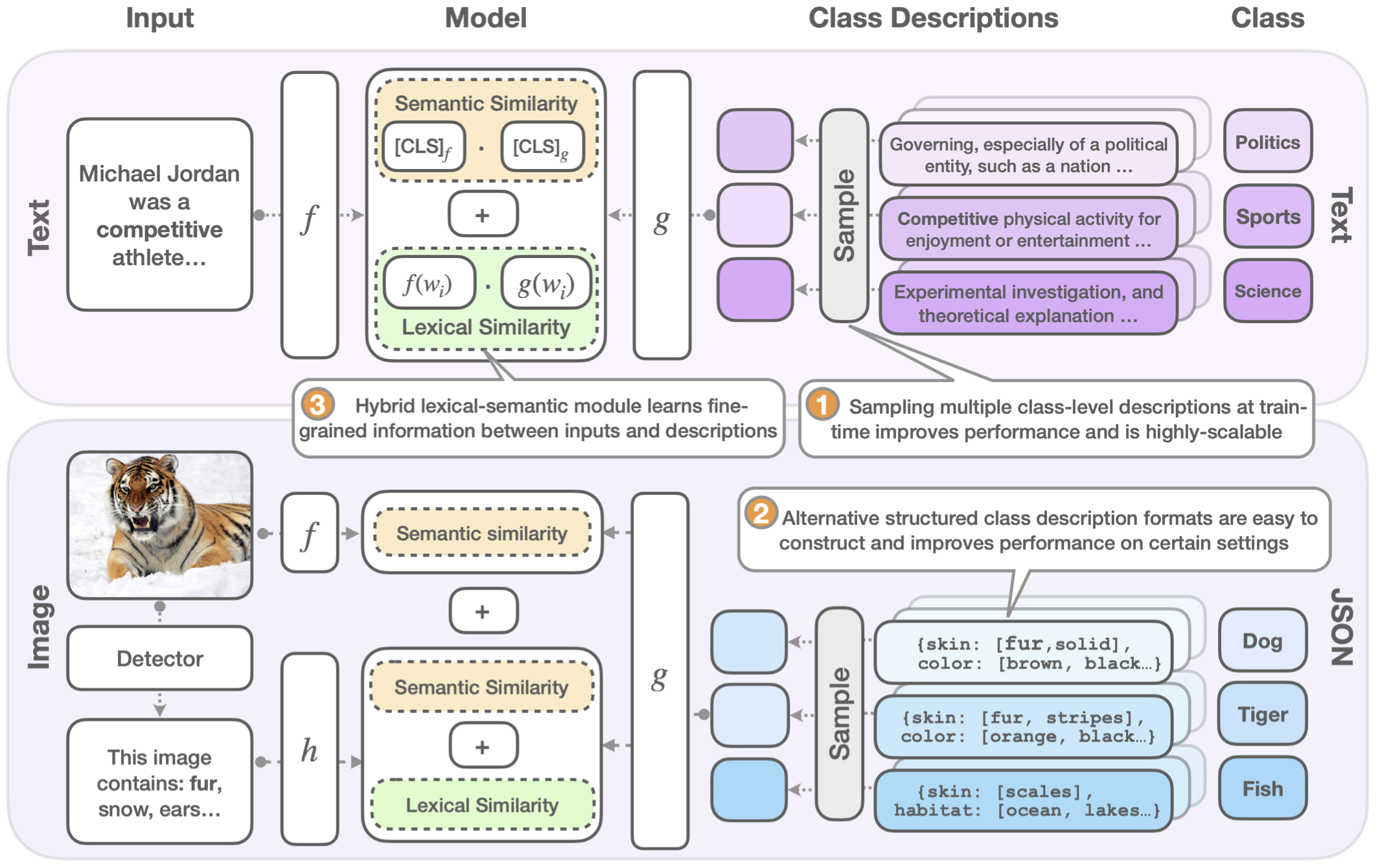

In this paper, we propose Semantic Supervision (SemSup), a unified paradigm for training classifiers that generalize over output spaces. In contrast to standard classification, which treats classes as discrete symbols, SemSup represents them as dense vector features obtained from descriptions of classes (e.g., "The cat is a small carnivorous mammal"). This allows the output space to be unbounded (in the space of descriptions) and enables models to generalize both over unseen inputs and unseen outputs (e.g., "The aardvark is a nocturnal burrowing mammal with long ears"). Specifically, SemSup enables four types of generalization: to (1) unseen class descriptions, (2) unseen classes, (3) unseen super-classes, and (4) unseen tasks. Through experiments on four classification datasets across two variants (multi-class and multi-label), two input modalities (text and images), and two output description modalities (text and JSON), we show that our SemSup models significantly outperform standard supervised models and existing models that leverage word embeddings over class names. For instance, our model outperforms baselines by 40 and 15 precision points on unseen descriptions and classes, respectively, on a news categorization dataset (RCV1). SemSup can serve as a pathway for scaling neural models to large unbounded output spaces and enabling better generalization and model reuse for unseen tasks and domains.
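
The core idea (classes represented by embeddings of their descriptions, so a new class needs only a new description) can be sketched with a toy encoder. The bag-of-words `encode` function stands in for the trained input and description encoders and is purely hypothetical; the class descriptions reuse the examples from the abstract.

```python
from __future__ import annotations


def encode(text: str, vocab: list[str]) -> list[float]:
    """Toy bag-of-words encoder; a stand-in for a learned text encoder (hypothetical)."""
    tokens = text.lower().split()
    return [float(tokens.count(word)) for word in vocab]


def predict(input_text: str, class_descriptions: dict[str, str], vocab: list[str]) -> str:
    """Pick the class whose description embedding best matches the input embedding."""
    x = encode(input_text, vocab)

    def score(name: str) -> float:
        d = encode(class_descriptions[name], vocab)
        return sum(a * b for a, b in zip(x, d))

    return max(class_descriptions, key=score)


vocab = ["mammal", "small", "carnivorous", "nocturnal", "burrowing", "ears"]
classes = {"cat": "The cat is a small carnivorous mammal"}
# A new class is added by writing a description; the scoring code is unchanged.
classes["aardvark"] = "The aardvark is a nocturnal burrowing mammal with long ears"
print(predict("a nocturnal animal with long ears", classes, vocab))  # aardvark
```

A standard classifier would need a new output row (and retraining) for "aardvark"; here the output space grows simply by adding a description.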

@article{hanjie2022semantic, title = {Semantic supervision: Enabling generalization over output spaces}, author = {Hanjie*, Austin W and Deshpande*, Ameet and Narasimhan, Karthik}, journal = {arXiv preprint arXiv:2202.13100}, year = {2022} }

- SPARTAN: Sparse Hierarchical Memory for Parameter-Efficient Transformers. Ameet Deshpande, Md Arafat Sultan, Anthony Ferritto, Ashwin Kalyan, Karthik Narasimhan, and Avirup Sil. arXiv preprint arXiv:2211.16634, 2022

Fine-tuning pre-trained language models (PLMs) achieves impressive performance on a range of downstream tasks, and their sizes have consequently been getting bigger. Since a different copy of the model is required for each task, this paradigm is infeasible for storage-constrained edge devices like mobile phones. In this paper, we propose SPARTAN, a parameter efficient (PE) and computationally fast architecture for edge devices that adds hierarchically organized sparse memory after each Transformer layer. SPARTAN freezes the PLM parameters and fine-tunes only its memory, thus significantly reducing storage costs by re-using the PLM backbone for different tasks. SPARTAN contains two levels of memory, with only a sparse subset of parents being chosen in the first level for each input, and children cells corresponding to those parents being used to compute an output representation. This sparsity combined with other architecture optimizations improves SPARTAN’s throughput by over 90% during inference on a Raspberry Pi 4 when compared to PE baselines (adapters) while also outperforming the latter by 0.1 points on the GLUE benchmark. Further, it can be trained 34% faster in a few-shot setting, while performing within 0.9 points of adapters. Qualitative analysis shows that different parent cells in SPARTAN specialize in different topics, thus dividing responsibility efficiently.
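
A minimal sketch of reading from a two-level sparse memory as described: the input scores all parent cells, only the top-k parents are kept, and an output is computed from their children alone. Dot-product scoring, softmax weighting over children, and all names are assumptions; in SPARTAN the memory sits after each frozen Transformer layer and only its parameters are trained, which this sketch does not show.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_hierarchical_memory(h, parent_keys, child_keys, child_values, top_k=2):
    """Read from a two-level memory, touching only the children of the top-k parents.

    parent_keys[p]: key vector for parent cell p
    child_keys[p][c]: key vector for child c of parent p
    child_values[p][c]: value vector for child c of parent p
    Returns (output vector, indices of the selected parents).
    """
    # Level 1: score every parent and keep only the top-k (the sparse step).
    parents = sorted(range(len(parent_keys)),
                     key=lambda p: dot(h, parent_keys[p]), reverse=True)[:top_k]
    # Level 2: attend over the children of the selected parents only.
    keys = [k for p in parents for k in child_keys[p]]
    values = [v for p in parents for v in child_values[p]]
    weights = softmax([dot(h, k) for k in keys])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(len(values[0]))]
    return output, parents


h = [1.0, 0.0]
parent_keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
child_keys = [[[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0]], [[-1.0, 0.0]]]
child_values = [[[2.0, 2.0], [4.0, 4.0]], [[9.0, 9.0]], [[-9.0, -9.0]]]
out, chosen = sparse_hierarchical_memory(h, parent_keys, child_keys, child_values, top_k=1)
print(chosen, out)  # [0] [3.0, 3.0]
```

The saving comes from level 2: children of unselected parents are never scored, so the cost grows with `top_k` rather than with the total number of child cells.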

@article{deshpande2022spartan, title = {SPARTAN: Sparse Hierarchical Memory for Parameter-Efficient Transformers}, author = {Deshpande, Ameet and Sultan, Md Arafat and Ferritto, Anthony and Kalyan, Ashwin and Narasimhan, Karthik and Sil, Avirup}, journal = {arXiv preprint arXiv:2211.16634}, year = {2022} }

- ALIGN-MLM: Word Embedding Alignment is Crucial for Multilingual Pre-training. Henry Tang, Ameet Deshpande, and Karthik Narasimhan. arXiv preprint arXiv:2211.08547, 2022

@article{tang2022align, title = {ALIGN-MLM: Word Embedding Alignment is Crucial for Multilingual Pre-training}, author = {Tang, Henry and Deshpande, Ameet and Narasimhan, Karthik}, journal = {arXiv preprint arXiv:2211.08547}, year = {2022} }

2020

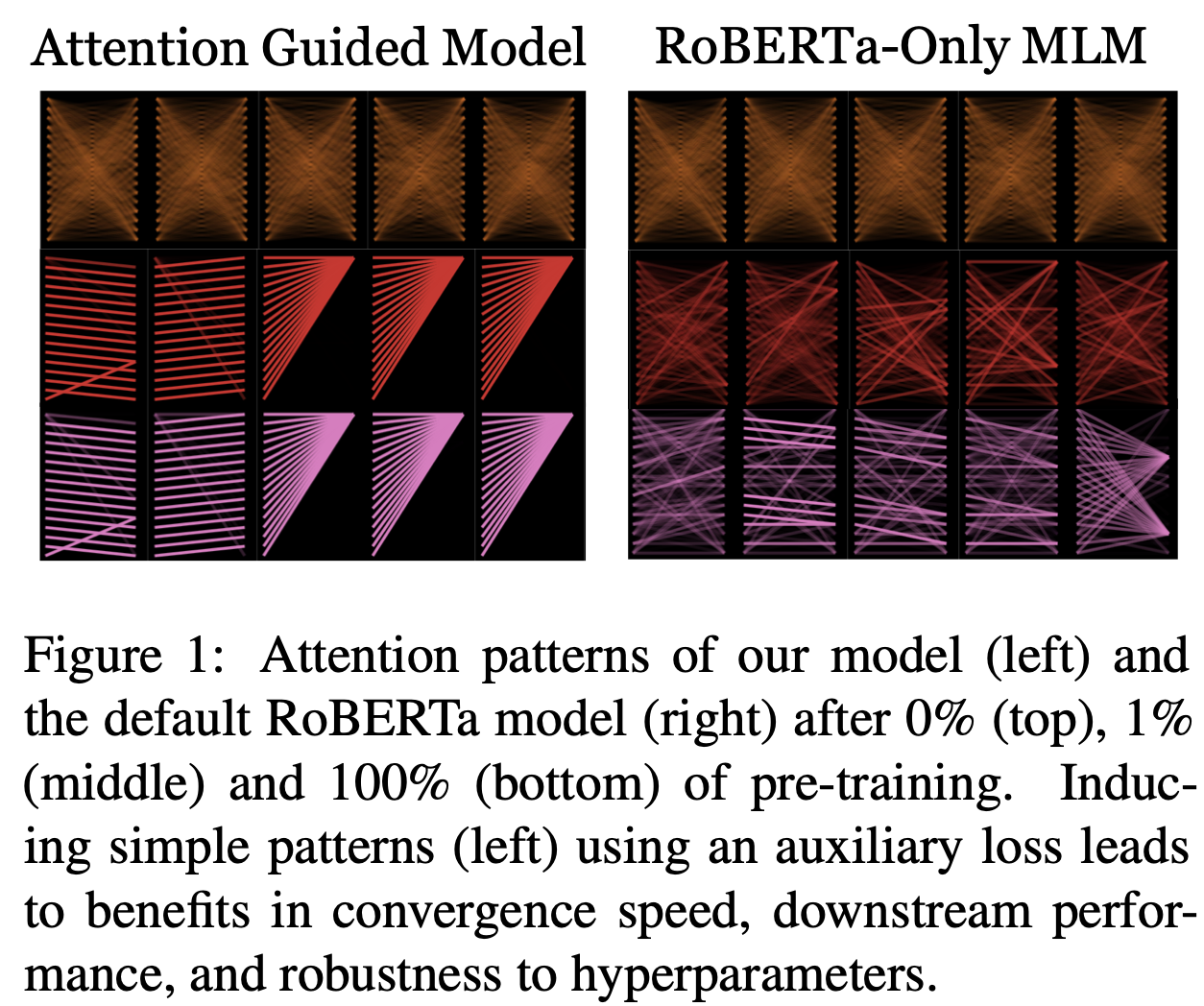

- Guiding Attention for Self-Supervised Learning with Transformers. Ameet Deshpande and Karthik Narasimhan. In Findings of the Association for Computational Linguistics: EMNLP 2020, 2020

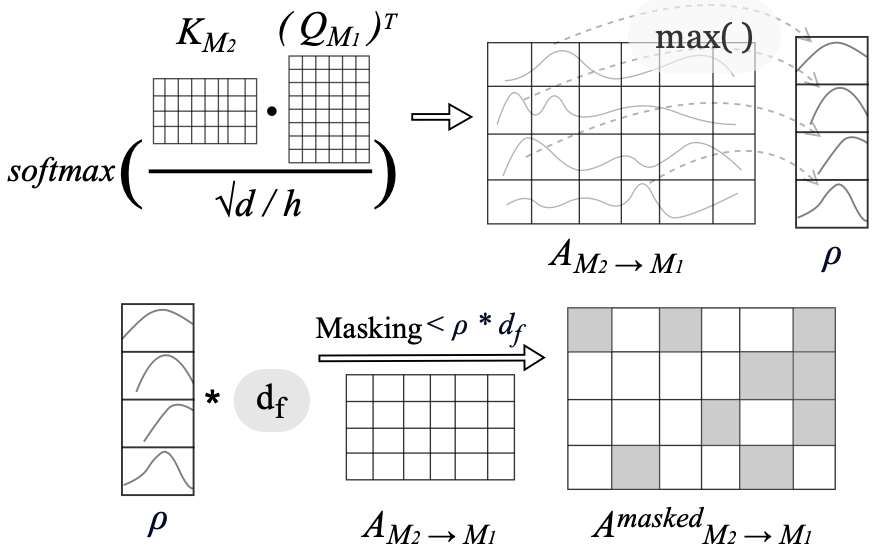

In this paper, we propose a simple and effective technique to allow for efficient self-supervised learning with bi-directional Transformers. Our approach is motivated by recent studies demonstrating that self-attention patterns in trained models contain a majority of non-linguistic regularities. We propose a computationally efficient auxiliary loss function to guide attention heads to conform to such patterns. Our method is agnostic to the actual pre-training objective and results in faster convergence of models as well as better performance on downstream tasks compared to the baselines, achieving state-of-the-art results in low-resource settings. Surprisingly, we also find that linguistic properties of attention heads are not necessarily correlated with language modeling performance.
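
One way to picture the auxiliary loss: pick fixed target patterns (here "attend to the previous token" and "attend to the next token", chosen as examples of positional, non-linguistic regularities), measure how far a head's attention map is from its target, and add that to the pre-training loss. The choice of patterns, the mean-squared-error distance, and the `weight` hyperparameter are assumptions for illustration rather than the paper's exact formulation.

```python
from __future__ import annotations


def previous_token_pattern(n: int) -> list[list[float]]:
    """Row i puts all attention on token i-1 (token 0 attends to itself)."""
    return [[1.0 if j == max(i - 1, 0) else 0.0 for j in range(n)] for i in range(n)]


def next_token_pattern(n: int) -> list[list[float]]:
    """Row i puts all attention on token i+1 (the last token attends to itself)."""
    return [[1.0 if j == min(i + 1, n - 1) else 0.0 for j in range(n)] for i in range(n)]


def guidance_loss(attn: list[list[float]], pattern: list[list[float]]) -> float:
    """Mean squared error between one head's attention map and a target pattern."""
    n = len(attn)
    return sum((attn[i][j] - pattern[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)


def total_loss(pretraining_loss: float, guided_heads, weight: float = 1.0) -> float:
    """pretraining_loss + weight * sum of guidance losses over (attn, pattern) pairs."""
    return pretraining_loss + weight * sum(guidance_loss(a, p) for a, p in guided_heads)


uniform = [[0.5, 0.5], [0.5, 0.5]]
print(guidance_loss(uniform, previous_token_pattern(2)))  # 0.25
```

Because the auxiliary term only touches attention maps, it can be added to any pre-training objective, which matches the abstract's point that the method is objective-agnostic.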

@inproceedings{deshpande2020guiding, title = {Guiding Attention for Self-Supervised Learning with Transformers}, author = {Deshpande, Ameet and Narasimhan, Karthik}, booktitle = {Findings of the Association for Computational Linguistics: EMNLP 2020}, pages = {4676--4686}, year = {2020} }

- Evaluating a generative adversarial framework for information retrieval. Ameet Deshpande and Mitesh M Khapra. arXiv preprint arXiv:2010.00722, 2020

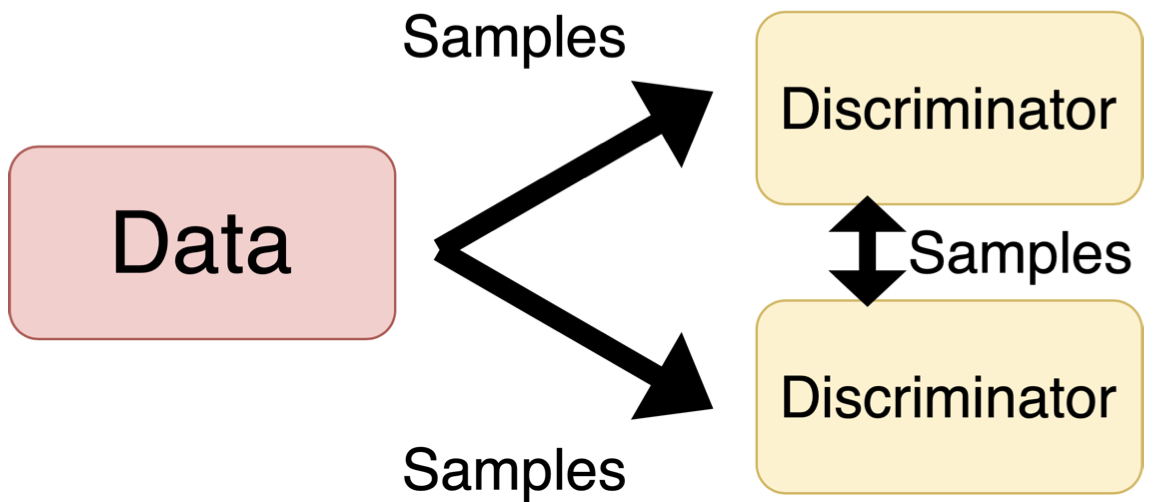

Recent advances in Generative Adversarial Networks (GANs) have led to their widespread application in multiple domains. A recent model, IRGAN, applies this framework to Information Retrieval (IR) and has gained significant attention over the last few years. In this focused work, we critically analyze multiple components of IRGAN, while providing experimental and theoretical evidence of some of its shortcomings. Specifically, we identify issues with the constant baseline term in the policy gradient optimization and show that the generator harms IRGAN’s performance. Motivated by our findings, we propose two models influenced by self-contrastive estimation and co-training which outperform IRGAN on two out of the three tasks considered.
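
For context on the "constant baseline term": IRGAN's generator is trained with policy gradients. The sketch below is the textbook single-sample REINFORCE estimator with a constant baseline for a softmax policy over candidate documents. It only illustrates where the baseline enters the gradient; it is not IRGAN's code or the paper's analysis.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_grad(logits, sampled, reward, baseline):
    """One-sample REINFORCE estimate of d E[reward] / d logits.

    For a softmax policy, d log p(sampled) / d logit_i = 1[i == sampled] - p_i.
    Subtracting a baseline that does not depend on the sampled item leaves the
    estimator unbiased in expectation; it only changes its variance.
    """
    p = softmax(logits)
    advantage = reward - baseline
    return [advantage * ((1.0 if i == sampled else 0.0) - p_i) for i, p_i in enumerate(p)]


# Generator over three candidate documents with equal logits.
print(reinforce_grad([0.0, 0.0, 0.0], sampled=0, reward=1.0, baseline=0.5))
```

If the baseline happens to equal the reward, the update for that sample vanishes entirely, which shows how strongly the choice of baseline shapes what the generator learns.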

@article{deshpande2020evaluating, title = {Evaluating a generative adversarial framework for information retrieval}, author = {Deshpande, Ameet and Khapra, Mitesh M}, journal = {arXiv preprint arXiv:2010.00722}, year = {2020} }

- Sentiment Analysis for Reinforcement Learning. Ameet Deshpande and Eve Fleisig. arXiv preprint arXiv:2010.02316, 2020

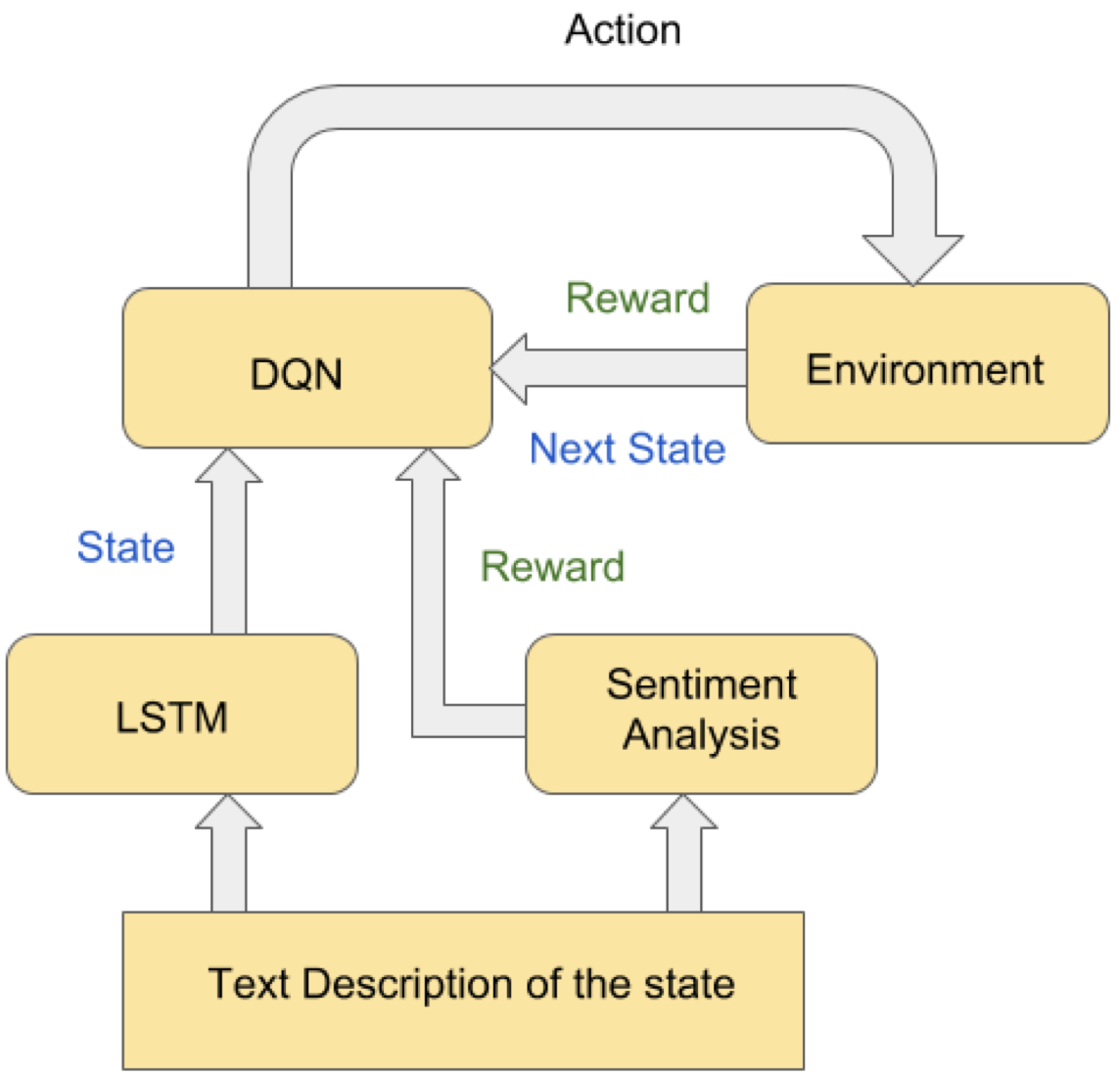

While reinforcement learning (RL) has been successful in natural language processing (NLP) domains such as dialogue generation and text-based games, it typically suffers from sparse rewards, which lead to slow convergence or none at all. Traditional methods that use text descriptions to extract only a state representation ignore the feedback inherently present in them. In text-based games, for example, descriptions like "Good Job! You ate the food" indicate progress, and descriptions like "You entered a new room" indicate exploration. Positive and negative cues like these can be converted to rewards through sentiment analysis. This technique converts the sparse reward problem into a dense one, which is easier to solve. Furthermore, this can enable reinforcement learning without rewards, in which the agent learns entirely from these intrinsic sentiment rewards. This framework is similar to intrinsic motivation, where the environment does not necessarily provide the rewards, but the agent analyzes and realizes them by itself. We find that providing dense rewards in text-based games using sentiment analysis improves performance under some conditions.
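
The conversion from textual feedback to a dense reward can be sketched as reward shaping: add a scaled sentiment score of each observation to the (often zero) game reward. The tiny word lexicon below is a hypothetical stand-in for a trained sentiment classifier, and `beta` is an assumed scaling hyperparameter.

```python
import re

# Hypothetical toy lexicon standing in for a trained sentiment classifier.
POSITIVE = {"good", "great", "well", "congratulations"}
NEGATIVE = {"bad", "cannot", "can't", "died", "fail", "failed"}


def sentiment_score(observation: str) -> float:
    """Score in [-1, 1]: (positive hits - negative hits) / total hits."""
    tokens = re.findall(r"[a-z']+", observation.lower())
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return 0.0 if pos + neg == 0 else (pos - neg) / (pos + neg)


def shaped_reward(env_reward: float, observation: str, beta: float = 0.1) -> float:
    """Dense reward: the game reward plus a scaled sentiment bonus."""
    return env_reward + beta * sentiment_score(observation)


print(shaped_reward(0.0, "Good Job! You ate the food", beta=0.5))  # 0.5
print(shaped_reward(0.0, "You cannot go that way.", beta=0.5))     # -0.5
```

Setting `env_reward` to 0 everywhere corresponds to the reward-free setting mentioned above. Note that this toy lexicon scores an exploration cue like "You entered a new room" as 0, so capturing such cues would need a richer sentiment model.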

@article{deshpande2020sentiment, title = {Sentiment Analysis for Reinforcement Learning}, author = {Deshpande, Ameet and Fleisig, Eve}, journal = {arXiv preprint arXiv:2010.02316}, year = {2020} }

- CLEVR Parser: A Graph Parser Library for Geometric Learning on Language Grounded Image Scenes. Raeid Saqur and Ameet Deshpande. In Proceedings of Second Workshop for NLP Open Source Software (NLP-OSS), 2020

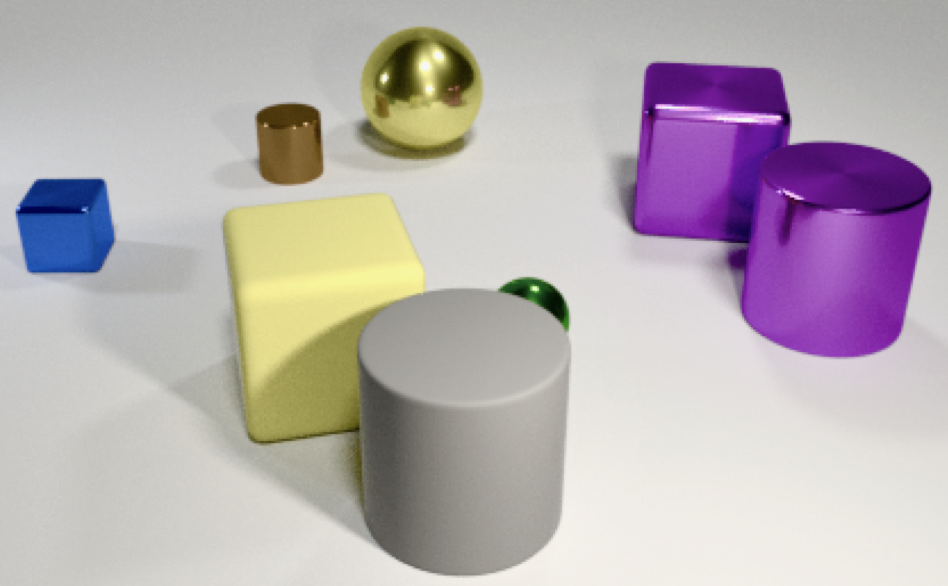

The CLEVR dataset has been used extensively in language grounded visual reasoning in Machine Learning (ML) and Natural Language Processing (NLP) domains. We present a graph parser library for CLEVR that provides functionality for extracting object-centric attributes and relationships, and for constructing structural graph representations for dual modalities. Structural order-invariant representations enable geometric learning and can aid in downstream tasks like language grounding to vision, robotics, compositionality, interpretability, and computational grammar construction. We provide three extensible main components (parser, embedder, and visualizer) that can be tailored to suit specific learning setups. We also provide out-of-the-box functionality for seamless integration with popular deep graph neural network (GNN) libraries. Additionally, we discuss downstream usage and applications of the library, and how it can accelerate research in the NLP community.
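
To illustrate what an object-centric graph representation of a scene means, here is a plain-Python sketch that turns a CLEVR-style scene description into node and edge lists. This is not the CLEVR Parser library's API. The function name and the assumed layout of the `relationships` field (for each relation, a per-object list of related object indices, as in CLEVR's scene annotations) are assumptions.

```python
from __future__ import annotations


def scene_to_graph(scene: dict) -> tuple[list[dict], list[tuple[int, str, int]]]:
    """Turn a CLEVR-style scene dict into (nodes, edges).

    Assumed format: scene["relationships"][rel][i] lists the indices of objects
    that stand in relation `rel` to object i (e.g. are to its left).
    """
    attributes = ("shape", "color", "size", "material")
    nodes = [{"id": i, **{a: obj[a] for a in attributes if a in obj}}
             for i, obj in enumerate(scene["objects"])]
    edges = []
    for rel, per_object in scene.get("relationships", {}).items():
        for i, others in enumerate(per_object):
            for j in others:
                edges.append((j, rel, i))  # object j is `rel` of object i
    return nodes, edges


scene = {
    "objects": [
        {"shape": "cube", "color": "red", "size": "large", "material": "rubber"},
        {"shape": "sphere", "color": "blue", "size": "small", "material": "metal"},
    ],
    "relationships": {"left": [[], [0]], "right": [[1], []]},
}
nodes, edges = scene_to_graph(scene)
print(edges)  # [(0, 'left', 1), (1, 'right', 0)]
```

Node and edge lists in this shape are straightforward to hand to a graph neural network library, since the representation no longer depends on the order in which objects were listed beyond their integer ids.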

@inproceedings{saqur2020clevr, title = {CLEVR Parser: A Graph Parser Library for Geometric Learning on Language Grounded Image Scenes}, author = {Saqur, Raeid and Deshpande, Ameet}, booktitle = {Proceedings of Second Workshop for NLP Open Source Software (NLP-OSS)}, pages = {14--19}, year = {2020} }

2019

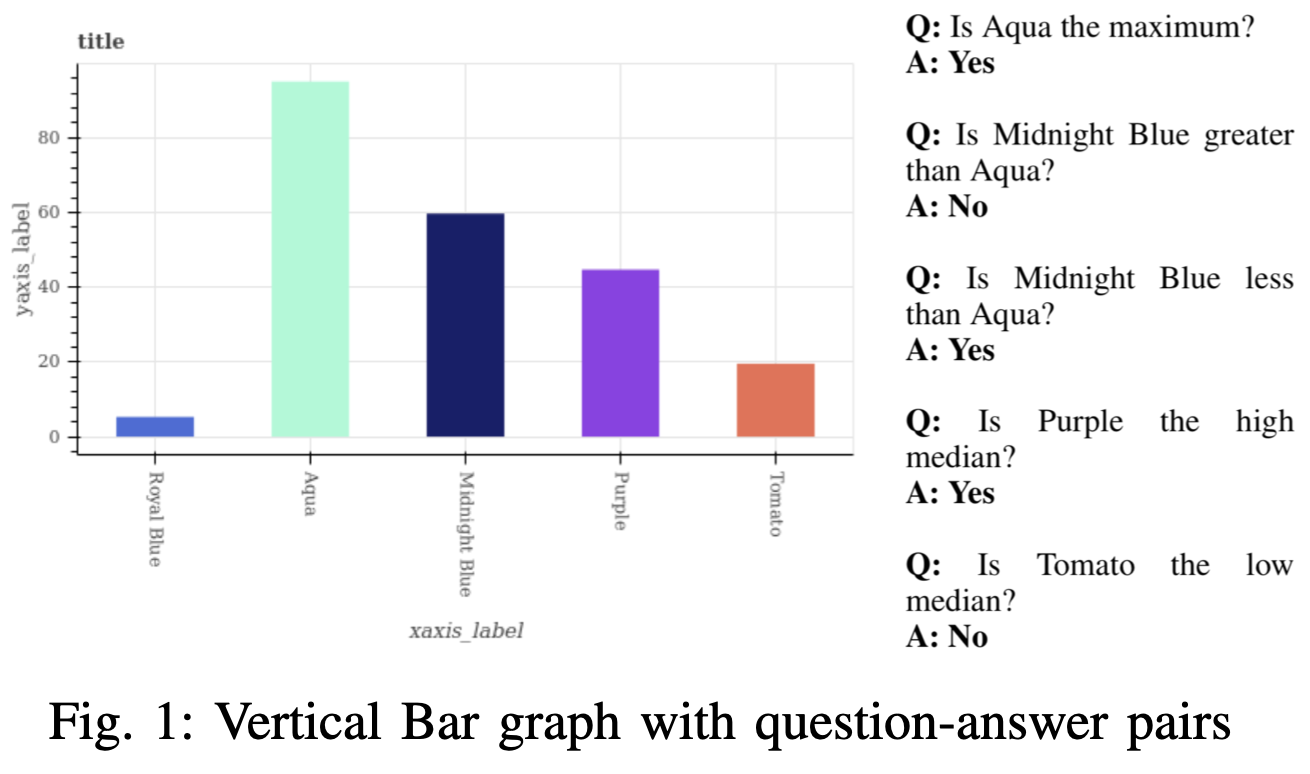

- FigureNet: A deep learning model for question-answering on scientific plots. Revanth Reddy, Rahul Ramesh, Ameet Deshpande, and Mitesh M Khapra. In 2019 International Joint Conference on Neural Networks (IJCNN), 2019

Deep learning has pushed the boundaries of a wide variety of tasks. One area of interest is tackling problems in reasoning and understanding, with the aim of emulating human intelligence. In this work, we describe a deep learning model that addresses the reasoning task of question-answering on categorical plots. We introduce a novel architecture, FigureNet, that learns to identify various plot elements, quantify the values they represent, and determine a relative ordering of these statistical values. We test our model on the FigureQA dataset, which provides images of scientific plots such as bar graphs and pie charts together with accompanying questions and rich annotations. Our approach outperforms the state-of-the-art Relation Networks baseline by approximately 7% on this dataset, while training more than an order of magnitude faster.
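
FigureNet learns element identification, quantification, and ordering end to end; the sketch below only illustrates the final ordering-and-answering step, assuming the per-element values have already been extracted. The functions `rank_elements` and `answer`, the question-type strings, and the bar values are all hypothetical.

```python
from typing import Dict, List, Optional


def rank_elements(values: Dict[str, float]) -> List[str]:
    """Plot-element labels ordered from smallest to largest value."""
    return sorted(values, key=values.__getitem__)


def answer(values: Dict[str, float], question: str, x: str, y: Optional[str] = None) -> bool:
    """Answer a yes/no comparison question from already-quantified plot values.

    The question names ("is_max", "is_min", ...) are hypothetical labels for
    FigureQA-style questions, not the dataset's actual identifiers.
    """
    order = rank_elements(values)
    if question == "is_max":
        return order[-1] == x
    if question == "is_min":
        return order[0] == x
    if question == "is_low_median":
        return order[(len(order) - 1) // 2] == x
    if question in ("greater_than", "less_than"):
        if y is None:
            raise ValueError(f"{question} needs a second element y")
        return values[x] > values[y] if question == "greater_than" else values[x] < values[y]
    raise ValueError(f"unknown question type: {question}")


# Hypothetical bar heights, as if read off a bar graph.
bars = {"Red": 12.0, "Blue": 30.5, "Green": 7.25, "Olive": 19.0}
print(rank_elements(bars))                           # ['Green', 'Red', 'Olive', 'Blue']
print(answer(bars, "is_max", "Blue"))                # True
print(answer(bars, "greater_than", "Red", "Olive"))  # False
```

Once values are quantified, a single sort supports every ordering question, which is why the ordering step can be cheap relative to the perception steps.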

@inproceedings{reddy2019figurenet, title = {Figurenet: A deep learning model for question-answering on scientific plots}, author = {Reddy, Revanth and Ramesh, Rahul and Deshpande, Ameet and Khapra, Mitesh M}, booktitle = {2019 International Joint Conference on Neural Networks (IJCNN)}, pages = {1--8}, year = {2019}, organization = {IEEE} }

- Leveraging Ontological Knowledge for Neural Language Models. Ameet Deshpande and Monisha Jegadeesan. In Proceedings of the ACM India Joint International Conference on Data Science and Management of Data, 2019

Neural Language Models such as Word2Vec and GloVe have been shown to encode semantic relatedness between words. Better methods for learning these embeddings can improve performance in numerous downstream applications such as sentiment analysis, question answering, and dialogue generation. Lexical ontologies such as WordNet are known to supply information about semantic similarity rather than relatedness. Further, learning word embeddings from small corpora is difficult for data-hungry neural networks. This work shows how methods that combine Word2Vec with ontologies can achieve better performance, reduce training time, and help adapt to new domains with minimal data.
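
The abstract does not spell out how Word2Vec and the ontology are combined. As one well-known way to inject ontology links into pretrained vectors, the sketch below uses retrofitting (Faruqui et al., 2015), a swapped-in technique that is not necessarily the paper's method. The toy vectors and synonym lexicon are hypothetical; in practice the lexicon might come from WordNet.

```python
from typing import Dict, List

Vector = List[float]


def retrofit(embeddings: Dict[str, Vector], lexicon: Dict[str, List[str]],
             alpha: float = 1.0, iterations: int = 10) -> Dict[str, Vector]:
    """Retrofitting: iteratively move each word vector toward the average of
    its ontology neighbours while an alpha-weighted term keeps it close to its
    original distributional vector.

    embeddings: word -> vector (e.g. from Word2Vec); lexicon: word -> related
    words (e.g. ontology synonyms). Words without neighbours are left unchanged.
    """
    new = {word: list(vec) for word, vec in embeddings.items()}
    for _ in range(iterations):
        for word, neighbours in lexicon.items():
            nbrs = [n for n in neighbours if n in new and n != word]
            if word not in new or not nbrs:
                continue
            beta = 1.0 / len(nbrs)  # uniform edge weights that sum to 1
            new[word] = [
                (alpha * embeddings[word][d] + beta * sum(new[n][d] for n in nbrs))
                / (alpha + beta * len(nbrs))
                for d in range(len(embeddings[word]))
            ]
    return new


# Hypothetical 2-d vectors and synonym links.
vectors = {"car": [1.0, 0.0], "automobile": [0.0, 1.0], "banana": [5.0, 5.0]}
synonyms = {"car": ["automobile"], "automobile": ["car"]}
fitted = retrofit(vectors, synonyms)
```

Because the update only adjusts existing vectors, it runs after embedding training and needs no extra corpus, which is one reason such post-processing suits low-data domains.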

@inproceedings{deshpande2019leveraging, title = {Leveraging Ontological Knowledge for Neural Language Models}, author = {Deshpande, Ameet and Jegadeesan, Monisha}, booktitle = {Proceedings of the ACM India Joint International Conference on Data Science and Management of Data}, pages = {350--353}, year = {2019} }

2018

- Improvements on hindsight learning. Ameet Deshpande, Srikanth Sarma, Ashutosh Jha, and Balaraman Ravindran. arXiv preprint arXiv:1809.06719, 2018

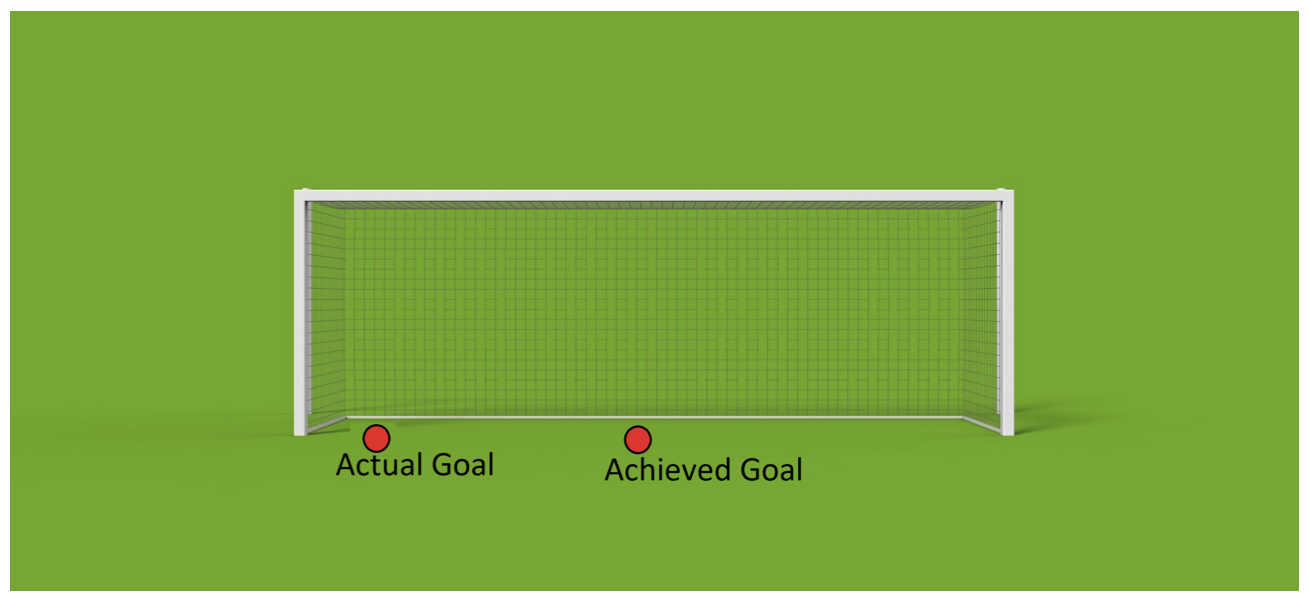

Sparse reward problems are one of the biggest challenges in reinforcement learning. Goal-directed tasks are one such class of sparse reward problems, in which a reward signal is received only when the goal is reached. One promising way to train an agent on goal-directed tasks is to use hindsight learning approaches. In these approaches, even when an agent fails to reach the desired goal, it learns to reach the goal it achieved instead. By doing this over multiple trajectories and generalizing the policy learned from the achieved goals, the agent learns a goal-conditioned policy that can reach any goal. One such approach is Hindsight Experience Replay (HER), which uses an off-policy reinforcement learning algorithm to learn a goal-conditioned policy. In HER, past transitions are replayed uniformly at random. Another approach is to use a hindsight version of policy gradients to learn a policy directly. In this work, we discuss different ways of replaying past transitions to improve learning in hindsight experience replay, focusing in particular on prioritized variants. We also apply Hindsight Policy Gradient methods to robotic tasks.
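
The two ingredients discussed above, hindsight goal relabeling and non-uniform replay, can be sketched as follows. This assumes the common HER "future" relabeling strategy and a proportional prioritized buffer keyed on absolute TD error; the paper's prioritization schemes may differ, and `Transition`, `her_relabel`, `PrioritizedBuffer`, and the toy episode are hypothetical.

```python
import random
from dataclasses import dataclass, replace
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Transition:
    state: Tuple[int, ...]
    action: int
    next_achieved: Tuple[int, ...]  # goal actually achieved after the action
    goal: Tuple[int, ...]
    reward: float


def sparse_reward(achieved: Tuple[int, ...], goal: Tuple[int, ...]) -> float:
    # 0 on success, -1 otherwise: the usual sparse goal-reaching signal.
    return 0.0 if achieved == goal else -1.0


def her_relabel(episode: Sequence[Transition], k: int = 4, rng=random) -> List[Transition]:
    """'future' strategy: for each step, also store k copies whose goal is
    replaced by a goal achieved at that step or later in the same episode."""
    out = list(episode)
    for t, tr in enumerate(episode):
        for _ in range(k):
            g = episode[rng.randrange(t, len(episode))].next_achieved
            out.append(replace(tr, goal=g, reward=sparse_reward(tr.next_achieved, g)))
    return out


class PrioritizedBuffer:
    """Proportional prioritized replay: P(i) is proportional to p_i ** alpha."""

    def __init__(self, alpha: float = 0.6, eps: float = 1e-3):
        self.alpha, self.eps = alpha, eps
        self.data: List[Transition] = []
        self.priorities: List[float] = []

    def add(self, transitions: Sequence[Transition]) -> None:
        max_p = max(self.priorities, default=1.0)  # new items get the max priority
        for tr in transitions:
            self.data.append(tr)
            self.priorities.append(max_p)

    def sample(self, batch_size: int, rng=random) -> List[int]:
        weights = [p ** self.alpha for p in self.priorities]
        return rng.choices(range(len(self.data)), weights=weights, k=batch_size)

    def update(self, indices: Sequence[int], td_errors: Sequence[float]) -> None:
        for i, err in zip(indices, td_errors):
            self.priorities[i] = abs(err) + self.eps  # eps keeps every item sampleable


# Hypothetical two-step episode that never reaches its goal (3,).
episode = [
    Transition(state=(0,), action=1, next_achieved=(1,), goal=(3,), reward=-1.0),
    Transition(state=(1,), action=1, next_achieved=(2,), goal=(3,), reward=-1.0),
]
buffer = PrioritizedBuffer()
buffer.add(her_relabel(episode, k=2, rng=random.Random(0)))
batch = buffer.sample(4, rng=random.Random(1))
# After a learning step, feed back hypothetical TD errors for the sampled indices.
buffer.update(batch, [0.5, 0.1, 2.0, 0.0])
```

Relabeling turns failed episodes into successful examples for other goals, and prioritization then replays the transitions the learner currently predicts worst more often than uniform sampling would.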

@article{deshpande2018improvements, title = {Improvements on hindsight learning}, author = {Deshpande, Ameet and Sarma, Srikanth and Jha, Ashutosh and Ravindran, Balaraman}, journal = {arXiv preprint arXiv:1809.06719}, year = {2018} }

- Discovering hierarchies using Imitation Learning from hierarchy aware policies. Ameet Deshpande, Harshavardhan Kamarthi, and Balaraman Ravindran. arXiv preprint arXiv:1812.00225, 2018

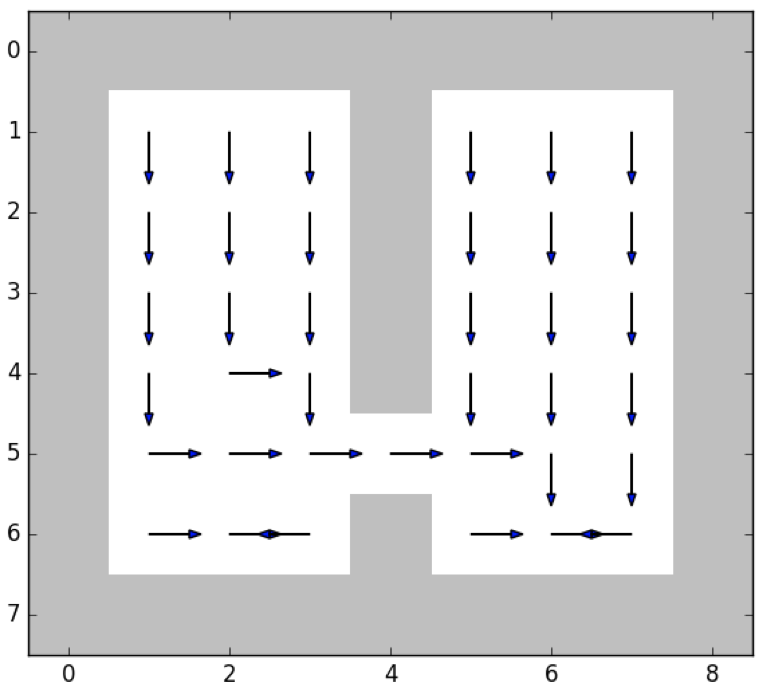

Learning options that allow agents to exhibit temporally higher-order behavior has proven useful for increasing exploration, reducing sample complexity, and in various transfer scenarios. Deep Discovery of Options (DDO) is a generative algorithm that learns a hierarchical policy along with options directly from expert trajectories. We perform a qualitative and quantitative analysis of the options inferred by DDO in different domains. To this end, we propose several metrics for comparing methods, such as the option termination condition, the hinge value function error, and a KL-divergence-based distance metric. Analyzing the options' termination conditions and the number of time steps each option ran revealed that the options were terminating prematurely. We suggest easily incorporated modifications that alleviate both the problem of overly short options and the collapse of multiple options to the same mode.
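
Two of the diagnostics above can be sketched on toy data: a KL-divergence-based distance between options and the average number of steps an option runs before terminating. The symmetrised KL averaged over a shared set of probe states is an assumption, since the abstract does not give the exact form, and `option_distance` and `mean_option_duration` are hypothetical helpers.

```python
import math
from typing import List, Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) for two discrete action distributions; eps guards against log(0)."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def option_distance(pi_a: List[List[float]], pi_b: List[List[float]]) -> float:
    """Symmetrised KL between two options' action distributions, averaged over
    the same probe states (row s of each argument is that option at state s).
    Values near 0 suggest the two options have collapsed to the same mode."""
    if not pi_a or len(pi_a) != len(pi_b):
        raise ValueError("need the same non-empty set of probe states")
    total = sum(0.5 * (kl_divergence(p, q) + kl_divergence(q, p)) for p, q in zip(pi_a, pi_b))
    return total / len(pi_a)


def mean_option_duration(option_trace: Sequence[int]) -> float:
    """Average length of runs of the same active option id in a trajectory.
    Values near 1 indicate options that terminate almost immediately."""
    if not option_trace:
        return 0.0
    runs = 1 + sum(cur != prev for prev, cur in zip(option_trace, option_trace[1:]))
    return len(option_trace) / runs


print(mean_option_duration([0, 0, 0, 1, 1, 2]))  # 2.0
print(mean_option_duration([0, 1, 0, 1]))        # 1.0
```

The symmetrised form is used here so that the distance does not depend on which option is listed first.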

@article{deshpande2018discovering, title = {Discovering hierarchies using Imitation Learning from hierarchy aware policies}, author = {Deshpande, Ameet and Kamarthi, Harshavardhan and Ravindran, Balaraman}, journal = {arXiv preprint arXiv:1812.00225}, year = {2018} }